
Have you experienced bottlenecks during testing? Let’s be honest for a moment. In many software development projects, testing is still a bottleneck that delays releases, and the larger the project, the worse the bottleneck. The frequently touted miracle cure is “test automation”. In theory it fits the bill: once implemented, it is available 24/7. In practice, however, many teams despair when they introduce test automation, because all too often the resources invested do not produce the desired added value. Sometimes this is because the test automation does not provide the right information, because the automated test cases are not reliable, or both.

Here we discuss eight characteristics that help ensure that test automation provides the desired added value. Ask yourself: how many of these eight characteristics does your test automation have?

The goal of software testing is to collect important information about the product and reveal its current condition. This information serves as a basis for decisions for everyone involved in the software development process. The fact that a test suite consists of 1,000 automated test cases does not in itself mean that the information it collects adds value. The central question is whether the test automation provides the right information about the product. To assess the quality of your test automation, ask whether it covers the functionality classified as critical. The “informative” characteristic should therefore answer the question of whether you have identified and addressed the critical risks.
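The question of whether the critical risks are addressed can be made checkable with a simple mapping from test cases to risks. The following is a minimal sketch, not a prescribed method: the risk register, the risk names, and the test names are all hypothetical and serve only to illustrate the idea.

```python
from typing import Dict, List, Set

# Hypothetical risk register: risk id -> criticality level.
RISKS: Dict[str, str] = {
    "payment-fails": "critical",
    "login-lockout": "critical",
    "typo-in-footer": "low",
}

# Hypothetical mapping: automated test case -> risks it addresses.
TEST_COVERAGE: Dict[str, Set[str]] = {
    "test_checkout_with_valid_card": {"payment-fails"},
    "test_footer_text": {"typo-in-footer"},
}


def uncovered_critical_risks(
    risks: Dict[str, str], coverage: Dict[str, Set[str]]
) -> List[str]:
    """Return the critical risks that no automated test case addresses."""
    covered: Set[str] = set().union(*coverage.values())
    return sorted(
        risk
        for risk, level in risks.items()
        if level == "critical" and risk not in covered
    )


if __name__ == "__main__":
    print(uncovered_critical_risks(RISKS, TEST_COVERAGE))
```

In this example, the suite has a test for the footer text but none for the login lockout, so a green test run would say nothing about one of the two critical risks.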

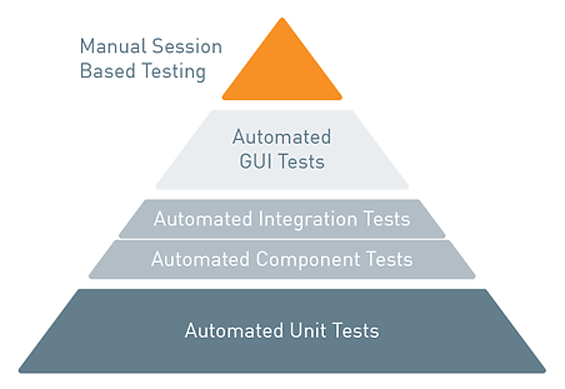

A test case is a question that we ask the application. The more precise the question, the more precise the answer. Before deciding on which test level (unit, integration, or end-to-end) to implement an automated test case, we should ask: “Which identified risk am I trying to address, and thus minimize, with this test case?”

Do I want to minimize the risk

Focused automated test cases reveal whether the actual state and the target state diverge. Once we have identified the risks and the automated test cases address them at a suitable test level, we can detect error states more quickly. The “risk-focused” characteristic should answer the question of which test level is best suited to addressing each identified risk.

A big challenge for test automation is flaky test cases: test cases that fail for no clear reason. Reliable test cases should fail only if the system behavior deviates from the defined target state. This can be very difficult to achieve, especially for automated test cases that are executed via the user interface.
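As an illustration, one widely observed source of flakiness in UI tests is timing: the test checks for an element before the application has finished rendering it. This is an assumption made for the example, not a cause named in the text. The sketch below replaces a fixed sleep with a polling wait; `wait_until` and `FakePage` are hypothetical helpers, with `FakePage` standing in for a real UI.

```python
import time
from typing import Callable


def wait_until(
    condition: Callable[[], bool], timeout: float = 5.0, interval: float = 0.05
) -> bool:
    """Poll `condition` until it returns True or `timeout` seconds elapse."""
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


class FakePage:
    """Hypothetical stand-in for a page whose button appears after a delay."""

    def __init__(self, ready_after: float) -> None:
        self._ready_at = time.monotonic() + ready_after

    def save_button_visible(self) -> bool:
        return time.monotonic() >= self._ready_at


def test_save_button_appears() -> None:
    page = FakePage(ready_after=0.2)
    # The test passes as soon as the button shows up and fails only
    # if it is still missing after the full timeout.
    assert wait_until(page.save_button_visible, timeout=2.0)
```

With a polling wait, the test no longer depends on how fast the machine happens to be, so a failure is more likely to indicate a real deviation from the target state.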

If unreliable test cases are a permanent part of the test suite, this often means that too little attention is being paid to the test cases. Unreliability erodes trust, because failed test cases are simply assumed to be flaky. As a result, failures are frequently not analyzed in detail, since flakiness is taken as a given. This reduces the added value of test automation, and the effort required is no longer proportionate to the benefit. Generally there are four reasons for flaky tests:

Test automation should be a permanent component of the test strategy. Ideally, everyone participating in the software development process coordinates continuously. On agile teams, the software developer is typically responsible for the unit tests. The integration tests are implemented by either the software developer or the software tester, and the software tester is usually responsible for the automated end-to-end test cases. In practice, however, coordination among the participants is often lacking. The software tester does not know what has been, or could be, addressed at the unit and integration test levels. Because of this lack of communication, the tests may fail to address important risks, the automated tests may be redundant, or both. Redundant tests mean more maintenance effort and slower test execution.

Automated test cases should be maintenance-friendly. Especially at the beginning of the software development process, the application changes frequently. This makes for a lot of work since the team has to adjust the test cases just as frequently. Appropriate design patterns reduce this maintenance work.

In software development projects, maintaining the test automation often takes up as much capacity as implementing new test cases, because maintainability was not considered when the test automation was implemented.
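One design pattern commonly used to reduce maintenance work in UI test automation is the Page Object pattern. The text does not name a specific pattern, so this is an illustrative choice. In the minimal sketch below, `FakeDriver`, `LoginPage`, and all locators are hypothetical; `FakeDriver` records actions instead of driving a real browser.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class FakeDriver:
    """Hypothetical stand-in for a browser driver; it only records actions."""

    fields: Dict[str, str] = field(default_factory=dict)
    clicked: List[str] = field(default_factory=list)

    def type_into(self, locator: str, text: str) -> None:
        self.fields[locator] = text

    def click(self, locator: str) -> None:
        self.clicked.append(locator)


class LoginPage:
    """Page object: the only place that knows the login page's locators."""

    USERNAME = "#username"
    PASSWORD = "#password"
    SUBMIT = "#login-button"

    def __init__(self, driver: FakeDriver) -> None:
        self.driver = driver

    def log_in(self, username: str, password: str) -> None:
        self.driver.type_into(self.USERNAME, username)
        self.driver.type_into(self.PASSWORD, password)
        self.driver.click(self.SUBMIT)


def test_login_submits_credentials() -> None:
    driver = FakeDriver()
    # The test describes the intent; it never touches a locator directly.
    LoginPage(driver).log_in("alice", "secret")
    assert driver.fields[LoginPage.USERNAME] == "alice"
    assert driver.clicked == [LoginPage.SUBMIT]
```

If the login form changes, only `LoginPage` has to be adjusted, rather than every test case that logs in.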

The automated test cases should provide quick feedback about the quality of the application under test. The higher we go in the test automation pyramid (see figure), the slower the automated test cases become. One solution is to execute the automated test cases in parallel, which can shorten the overall execution time considerably.
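The effect of parallel execution can be sketched with Python's standard `concurrent.futures` module. This is a simplified illustration rather than a real test runner: `slow_check` is a hypothetical placeholder for a slow end-to-end test, and the sketch assumes that the test cases are independent of one another and share no state.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict


def slow_check(duration: float = 0.3) -> Callable[[], bool]:
    """Build a hypothetical slow test case that simply waits, then passes."""

    def run() -> bool:
        time.sleep(duration)  # stands in for slow UI or network interaction
        return True

    return run


def run_in_parallel(
    tests: Dict[str, Callable[[], bool]], workers: int = 4
) -> Dict[str, bool]:
    """Run independent test cases concurrently and collect their results."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = {name: pool.submit(test) for name, test in tests.items()}
        return {name: future.result() for name, future in futures.items()}


if __name__ == "__main__":
    suite = {f"test_{i}": slow_check() for i in range(4)}
    start = time.monotonic()
    results = run_in_parallel(suite)
    print(results, f"{time.monotonic() - start:.2f}s")
```

Run one after another, the four placeholder tests would need about 1.2 seconds; with four workers the waiting overlaps. The gain only holds if the tests do not interfere with each other, which is why independence of the test cases is stated as an assumption.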

As the software development project grows, new features are added incrementally, and these create new business processes. These processes could not be anticipated when the test automation was first implemented. As a result, test cases that address the new business processes frequently have to be integrated into the test automation framework after the fact, which is time-consuming. It also carries the risk of duplicated code, which contradicts the principle “don’t repeat yourself.”
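One way to limit duplication when a new business process arrives is to build test cases from small, reusable steps. The following is a minimal sketch under that assumption; `Shop`, `given_shop_with_order`, and the order and return processes are hypothetical examples, not taken from a real project.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Shop:
    """Hypothetical in-memory system under test."""

    orders: List[str] = field(default_factory=list)
    returns: List[str] = field(default_factory=list)

    def order(self, item: str) -> None:
        self.orders.append(item)

    def return_item(self, item: str) -> None:
        if item not in self.orders:
            raise ValueError(f"{item} was never ordered")
        self.orders.remove(item)
        self.returns.append(item)


def given_shop_with_order(item: str) -> Shop:
    """Reusable step: a shop in which `item` has already been ordered."""
    shop = Shop()
    shop.order(item)
    return shop


def test_order_is_recorded() -> None:
    shop = given_shop_with_order("book")
    assert shop.orders == ["book"]


def test_return_moves_item() -> None:
    # The later-added return process reuses the existing order step
    # instead of copying its setup code.
    shop = given_shop_with_order("book")
    shop.return_item("book")
    assert shop.orders == []
    assert shop.returns == ["book"]
```

Here the test for the return process needs only one new line of setup, because the ordering step already exists as a shared building block.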

Various roles participate in test automation, and this has to be considered during implementation. The test automation framework should be built so that no weeks of training are required to work actively with it. It should be self-explanatory.